Photo by Peter Pryharski on Unsplash

Fastparquet: A Guide for Python Data Engineers

A straightforward approach to using parquet files in Python

Recently I was on the path to hunt down a way to read and test parquet files to help one of the remote teams out. The Apache Parquet file format, known for its high compression ratio and speedy read/write operations, particularly for complex nested data structures, has emerged as a leading solution in this domain. Fastparquet, a Python library, offers a seamless interface to work with Parquet files, combining the power of Python's data handling capabilities with the efficiency of the Parquet file format. I wanted to capture what I found and thought I would write down a step by step guide in case it is also useful for others.

Getting Started with Fastparquet

Setting Up Your Environment

First things first, you'll need to install Fastparquet. Assuming Python is already up and running on your system, Fastparquet can be easily added using pip, the Python package manager. Open up your terminal or command prompt and execute:

pip install fastparquet

This command installs Fastparquet along with its necessary dependencies, setting the stage for your work with Parquet files.

Writing Data to Parquet

Fastparquet enables straightforward writing of Pandas DataFrame objects to Parquet files, preserving the data schema. Here's a quick example:

import pandas as pd

from fastparquet import write

# Sample DataFrame

data = {'Name': ['John', 'Anna', 'Peter', 'Linda'],

'Age': [28, 34, 29, 32],

'City': ['New York', 'Paris', 'Berlin', 'London']}

df = pd.DataFrame(data)

# Writing to Parquet

write('people.parquet', df)

In this code snippet, we create a DataFrame and utilize write from Fastparquet to save it as 'people.parquet'.

Reading Parquet Files

Retrieving data from a Parquet file into a DataFrame is easy too:

from fastparquet import ParquetFile

# Load and convert to DataFrame

pf = ParquetFile('people.parquet')

df = pf.to_pandas()

print(df)

Here, the ParquetFile class reads the Parquet file, enabling easy conversion back to a DataFrame.

Why Parquet?

Understanding the core advantages of Parquet itself is worthwhile. Parquet is a columnar storage file format offering superior compression and efficiency for analytical queries, thanks to several key features:

Columnar Storage: Data is stored by column rather than row, enhancing compression and encoding efficiency.

Compression and Encoding: Various schemes are employed to reduce storage requirements and speed up data access.

Complex Data Types: Parquet supports nested data structures, such as lists and maps.

Schema Evolution: New columns can be added to datasets without altering existing data, facilitating iterative development.

These features make Parquet particularly advantageous for big data and real-time analytics, offering significant performance improvements and cost savings. In the world of cloud this file format saves time and money in storage and compute.

Advanced Features

Fastparquet isn't just about reading and writing data; it also offers functionalities that tap into the more sophisticated aspects of Parquet.

Partitioning and Filters

Partitioning creates a folder and divides your dataset into multiple files based on column values, which can drastically improve query performance. Fastparquet simplifies working with such datasets:

# Specify the output directory

output_dir = 'people_partitioned'

# Specify the output directory

output_dir = 'people_partitioned'

# Writing to Parquet with partitioning

write(output_dir, df, partition_on=['City'], file_scheme='hive')

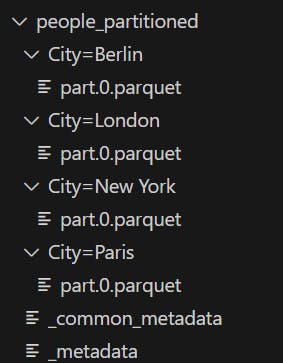

You'll see the data split out into partitioned folders like this

To read the partitioned data you simply reference the folder that was created

from fastparquet import ParquetFile

# Load and convert to DataFrame

pf = ParquetFile('people_partitioned')

df = pf.to_pandas()

print(df)

Filters can also be applied to read operations using the pandas syntax, allowing for efficient data retrieval:

# Reading with filters

df = pf.to_pandas(filters=[('Age', '>', 30)])

Compression

With Fastparquet, you can specify compression codecs (e.g., GZIP, SNAPPY) to reduce file sizes further:

# Writing with compression

write('people_compressed.parquet', df, compression='GZIP')

Optimizing Your Data Workflow

Next time you have a giant csv or spreadsheet and need to do some queries. Why not give Fastparquet a go? Turn your csv into parquet files :)

Here is an example with the same sample data in a csv.

import pandas as pd

from fastparquet import write

# Replace 'people.csv' with the path to your actual CSV file

df = pd.read_csv('people.csv')

# Specify the output directory for the partitioned Parquet files

output_dir = 'people_partitioned'

# Write the DataFrame to a partitioned Parquet file, partitioned by 'City'

write(output_dir, df, partition_on=['City'], file_scheme='hive')

Conclusion

Fastparquet stands out as a powerful tool for Python data engineers, bringing the efficiency and performance of the Parquet file format to the Python ecosystem. By following the guidelines and examples presented in this guide, you can start integrating Fastparquet into your data processing pipelines, enhancing your data handling and analysis capabilities. Whether dealing with large-scale data processing or requiring swift data analytics, embracing Fastparquet and the Parquet format will significantly benefit your projects, making data storage and retrieval tasks more efficient and cost-effective.